Hardware and Technical Specs

The Minerva supercomputer is maintained by High Performance Computing (HPC). Minerva was created in 2012 and has been upgraded several times, most recently in November 2024, and now provides over 11 petaflops of compute power. It consists of 24,912 Intel Platinum compute cores spanning several processor generations (2.3 GHz, 2.6 GHz, and 2.9 GHz), with 96, 64, or 48 cores per two-socket node and up to 1.5 terabytes (TB) of memory per node. The system also includes 356 graphics processing units (GPUs): 236 Nvidia H100, 32 Nvidia L40S, 40 Nvidia A100, and 48 Nvidia V100. In total, Minerva has 440 TB of memory and 32 petabytes of spinning storage accessed via IBM’s Spectrum Scale/General Parallel File System (GPFS). Minerva has contributed to over 1,900 peer-reviewed publications since 2012. The cluster design (the number of nodes, the amount of memory per node, and the amount of disk space for storage) is driven by the research needs of Minerva users.

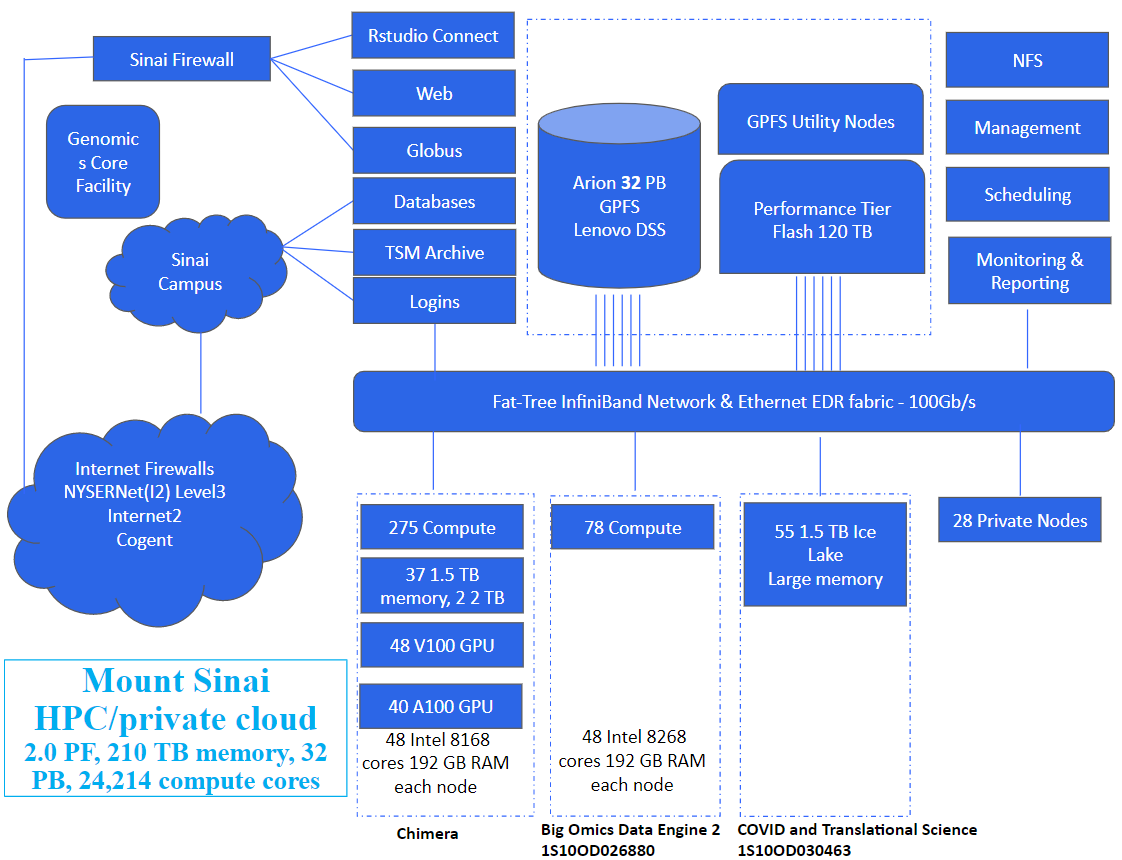

The following diagram shows the overall Minerva configuration.

Compute Nodes

Chimera Partition

- 4 login nodes – Intel Xeon(R) Platinum 8168 24C, 2.7GHz – 384 GB memory

- 275 compute nodes* – Intel 8168 24C, 2.7GHz – 192 GB memory

- 13,152 cores (48 per node, 2 sockets per node)

- 37 high memory nodes – Intel 8168/8268 24C, 2.7GHz/2.9GHz – 1.5 TB memory

- 48 V100 GPUs in 12 nodes – Intel 6142 16C, 2.6GHz – 384 GB memory – 4x V100-16 GB GPU

- 32 A100 GPUs in 8 nodes – Intel 8268 24C, 2.9GHz – 384 GB memory – 4x A100-40 GB GPU

  - 1.92 TB SSD (1.8 TB usable) per node

- 8 A100 GPUs in 2 nodes – Intel 8358 32C, 2.6GHz – 2 TB memory – 4x A100-80 GB GPU

  - 7.68 TB NVMe PCIe SSD (7.0 TB usable) per node, which can deliver a sustained read-write speed of 3.5 GB/s, in contrast with SATA SSDs, which are limited to 600 MB/s

  - The A100 GPUs are connected via NVLink

- 10 gateway nodes

- New NFS storage (for users' home directories) – 192 TB raw / 160 TB usable RAID6

- Mellanox EDR InfiniBand fat tree fabric (100Gb/s)

*Compute node: where you run your applications. Users do not have direct access to these machines. Access is managed through the LSF job scheduler.
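To put the NVMe figure quoted for the A100-80 GB nodes into perspective, the sketch below estimates how long an idealized sequential transfer would take at the two rates given in the list (3.5 GB/s for NVMe, 600 MB/s as the SATA ceiling). The 1 TB dataset size and the function name are illustrative assumptions, and real throughput will depend on access pattern and file system overhead.

```python
# Rough transfer-time comparison using the sustained rates quoted above.
NVME_GB_PER_S = 3.5   # NVMe PCIe SSD rate from the spec list
SATA_GB_PER_S = 0.6   # SATA SSD ceiling (600 MB/s) from the spec list


def transfer_seconds(size_gb: float, rate_gb_per_s: float) -> float:
    """Idealized time to stream size_gb at a constant rate."""
    if rate_gb_per_s <= 0:
        raise ValueError("rate must be positive")
    return size_gb / rate_gb_per_s


dataset_gb = 1000.0  # hypothetical 1 TB dataset (decimal units)
nvme_s = transfer_seconds(dataset_gb, NVME_GB_PER_S)
sata_s = transfer_seconds(dataset_gb, SATA_GB_PER_S)
print(f"NVMe: {nvme_s / 60:.1f} min, SATA: {sata_s / 60:.1f} min, "
      f"ratio: {sata_s / nvme_s:.1f}x")
```

Under these assumptions the NVMe drive finishes in a little under one sixth of the SATA time, which is simply the ratio of the two quoted rates.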

BODE2 Partition

$2M S10 BODE2 awarded by NIH (Kovatch PI)

- 3,744 cores on 2.9 GHz Intel Cascade Lake 8268 processors in 78 nodes (48 cores per node)

- 192 GB of memory per node

- 240 GB of SSDs per node

- 15 TB memory (collectively)

- Open to all NIH funded projects

CATS Partition

$2M CATS awarded by NIH (Kovatch PI)

- 3,520 cores on 2.6 GHz Intel IceLake 8358 processors in 55 nodes (64 cores per node)

- 1.5 TB of memory per node

- 82.5 TB memory (collectively)

- Open to eligible NIH funded projects
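The collective figures quoted for the BODE2 and CATS partitions follow directly from the per-node numbers. The sketch below reproduces that arithmetic; the `Partition` class and its field names are illustrative, and decimal units (1 TB = 1000 GB) are assumed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Partition:
    name: str
    nodes: int
    cores_per_node: int
    mem_gb_per_node: float

    @property
    def total_cores(self) -> int:
        return self.nodes * self.cores_per_node

    @property
    def total_mem_tb(self) -> float:
        # Assumes decimal units: 1 TB = 1000 GB
        return self.nodes * self.mem_gb_per_node / 1000.0


# Per-node values taken from the partition lists above
bode2 = Partition("BODE2", nodes=78, cores_per_node=48, mem_gb_per_node=192)
cats = Partition("CATS", nodes=55, cores_per_node=64, mem_gb_per_node=1500)

for p in (bode2, cats):
    print(f"{p.name}: {p.total_cores} cores, {p.total_mem_tb:.1f} TB memory")
```

This gives 3,744 cores and about 15 TB for BODE2, and 3,520 cores and 82.5 TB for CATS, matching the listed totals.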

Private Nodes

Purchased by private groups and hosted on Minerva.

In summary,

Total system memory (computes + GPU + high mem) = 210 TB

Total number of cores (computes + GPU + high mem) = 24,214 cores

Peak performance (computes + GPU + high mem, CPU only) = 2 PFLOPS
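As a rough sanity check on the CPU-only peak figure, theoretical peak can be estimated as cores × clock × floating-point operations per cycle. The sketch below assumes 32 double-precision FLOPs per cycle per core (two AVX-512 FMA units) and a representative 2.7 GHz clock for all cores. Both values are assumptions made for illustration, not figures from this page, and sustained clocks under AVX-512 load are typically lower.

```python
def peak_pflops(cores: int, clock_ghz: float, flops_per_cycle: int = 32) -> float:
    """Theoretical double-precision peak in PFLOPS.

    flops_per_cycle=32 is an assumption: two AVX-512 FMA units per core,
    each handling 8 doubles with a fused multiply-add (2 operations).
    """
    return cores * clock_ghz * 1e9 * flops_per_cycle / 1e15


TOTAL_CORES = 24_214      # total core count from the summary above
ASSUMED_CLOCK_GHZ = 2.7   # assumption: one representative clock for all cores

estimate = peak_pflops(TOTAL_CORES, ASSUMED_CLOCK_GHZ)
print(f"Estimated CPU-only peak: {estimate:.2f} PFLOPS")
```

Under these assumptions the estimate lands close to the 2 PFLOPS quoted above.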

File System Storage

For Minerva, we focused on parallel file systems because NFS and other file systems cannot scale to the number of nodes or deliver adequate performance for the sheer number of files that the genomics workload entails. Specifically, Minerva uses IBM’s General Parallel File System (GPFS) because it offers features that are particularly useful for this workload, such as parallel metadata, tiered storage, and sub-block allocation. Metadata is the information about the data in the file system. Flash storage holds the metadata and small files for fast access.

Currently we have one parallel file system on Minerva, Arion, which users can access at /sc/arion. The Hydra file system was retired at the end of 2020.
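As a minimal sketch of checking the `/sc/arion` mount from a script, the example below uses Python's standard `shutil.disk_usage`. The helper name `usage_summary` is hypothetical. The numbers it reports describe the file system as seen by the operating system and may not reflect any per-user or per-project quota; how quotas are reported on Minerva is not covered here.

```python
import shutil
from pathlib import Path


def usage_summary(path: str = "/sc/arion") -> str:
    """Return a one-line usage summary for the file system holding `path`."""
    p = Path(path)
    if not p.exists():
        # The path is only expected to exist on Minerva itself
        return f"{path}: not mounted on this machine"
    usage = shutil.disk_usage(p)
    tb = 1000 ** 4  # bytes per decimal terabyte
    return (f"{path}: {usage.used / tb:.2f} TB used of "
            f"{usage.total / tb:.2f} TB ({usage.free / tb:.2f} TB free)")


print(usage_summary())
```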

| GPFS Name | Lifetime | Storage Type | Raw (PB) | Usable (PB) |
| --- | --- | --- | --- | --- |
| Arion | 2019 – | Lenovo DSS | 14 | 9.6 |
| Arion | 2019 – | Lenovo G201 flash | 0.12 | 0.12 |
| Arion | 2020 – | Lenovo DSS | 16 | 11.2 |
| Arion | 2021 – | Lenovo DSS | 16 | 11.2 |
| Total | | | 46 | 32 |
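The totals in the Arion table can be reproduced by summing the individual tiers. The sketch below does so and also reports the overall usable fraction; the variable names are illustrative.

```python
# (year added, storage type, raw PB, usable PB) rows from the Arion table above
arion_tiers = [
    ("2019", "Lenovo DSS", 14.0, 9.6),
    ("2019", "Lenovo G201 flash", 0.12, 0.12),
    ("2020", "Lenovo DSS", 16.0, 11.2),
    ("2021", "Lenovo DSS", 16.0, 11.2),
]

raw_total = sum(raw for _, _, raw, _ in arion_tiers)
usable_total = sum(usable for _, _, _, usable in arion_tiers)

print(f"Raw: {raw_total:.2f} PB, usable: {usable_total:.2f} PB, "
      f"usable fraction: {usable_total / raw_total:.0%}")
```

The sums come to roughly 46 PB raw and 32 PB usable once rounded, so about 70% of raw capacity is usable.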

Supported by grant UL1TR004419 from the National Center for Advancing Translational Sciences, National Institutes of Health.

Acknowledging Mount Sinai in Your Work

Use of the S10 BODE and CATS partitions requires acknowledgement of NIH support in your publications. To assist, we provide the exact acknowledgement wording required by NIH on the acknowledgements page.