

Disaster Recovery Plan

- The Scientific Computing Facility consists of the Minerva supercomputer, which scientists in the medical school use for research purposes only.

- The Scientific Computing Facility is developing a Disaster Recovery Plan. The Plan outlines the policies, procedures, and responsibilities for recovering IT systems and data in the event of a disaster, so that research can continue.

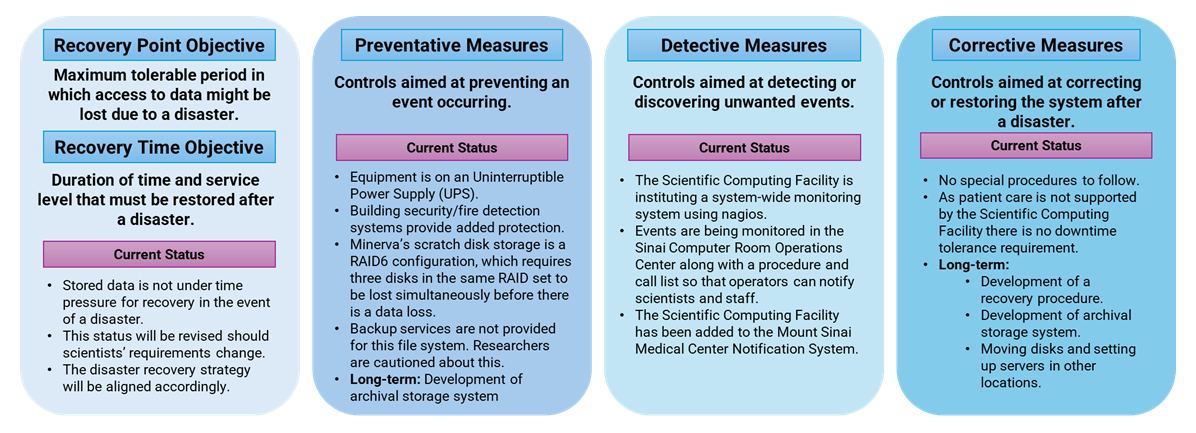

- The Plan consists of four parts: the recovery point and recovery time objectives, preventative measures, detective measures, and corrective measures. These parts are illustrated in more detail in the figure.

- The Plan will be updated twice a year to reflect changing requirements and new resources.